generative glitter is a weekly newsletter focused on how to use generative AI to work better—from theory to techniques. Let’s go!

Instead of focusing on the projected adoption of AI in every facet of our lives, consider honing in on what is unAI-able. […] actions, tasks and skills that can’t be digitized or automated.—Brandeis Marshall on Medium

Losses (and some gains)

In the news this week are reports about job displacement from generative AI. The Washington Post interviews two copywriters who have lost work, one who was previously employed full time by a small company and another who was a contractor and has since decided to take courses to become a plumber (“a trade is more future-proof,” he said).

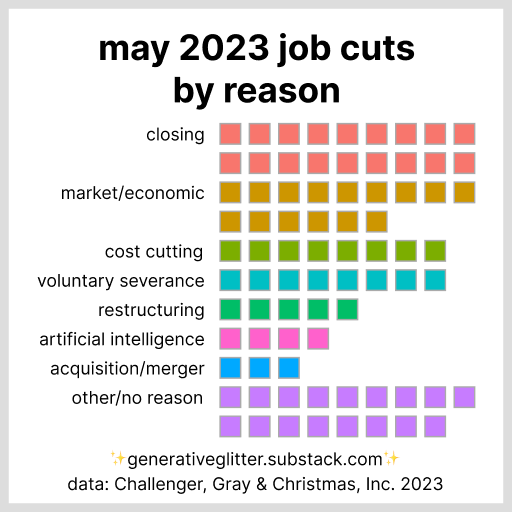

These are examples in a pattern that is detectable in job loss data. A new study by Challenger, Gray & Christmas, Inc., an outplacement company, shows about 5% of May 2023 job cuts were due to “artificial intelligence.” The fact that AI is even its own category on this list is wild. (See the other categories in the graph below.)

Meanwhile, The Wall Street Journal shares some cases of entrepreneurs and freelancers who have used generative AI to increase their productivity in fields ranging from marketing to design to legal work.

In the above examples, the authors note that most generative AI content is still not ready for “product prime time” without a human in the loop.

What could go wrong? Here is an important case in point that ties together many of the threads of this week’s issue: the US National Eating Disorders Association (NEDA) just pulled the plug on a new eating disorder hotline chatbot after it recommended “healthy eating tips” and suggested ways to lose weight to users (not cool, chatbot).

I don’t know whether this “black swan” event (apparently 1 in 2,500) could have been avoided with more context or product testing. But it is a cautionary tale for high-risk situations where a human is not involved.

Skillset reset

Re-skilling has become a hot topic with the rapidly evolving AI landscape. How do we manage the influx of AI into jobs and adapt? One strategy is to lean into learning how to use AI. Another is to lean into learning how to do things that are uniquely human.

Jensen Huang, CEO of Nvidia, commented this week on this skillset shift and declared that, with generative AI, “[e]veryone is a programmer. Now, you just have to say something to the computer.”

But just because more people have access to the tools doesn’t mean they know how to use them. Kelsey Behringer, COO of an AI-powered writing education platform, writes in Fast Company about the role of educators in this shift. She explains why it is necessary to equip students with the skills to use generative AI responsibly, ethically, and effectively.

Other companies are jumping on the AI-learning bandwagon, too. Pearson, an education publisher, is developing tools for businesses to identify both people who need re-skilling and jobs that need reframing. In a recent interview, their CEO, Andy Bird, gave an example: “if you’re an accountant, you already possess 25% of the skills you need to become a data scientist. […] you have some basic core competencies to take you from Job A to Job B.” This scenario is framed around helping people jump from one job description to another.

I’m interested in what this means for career tracks. If we’re all programmers, then the AI-fueled future is less about turning everyone into “data scientists” and more about redefining what it means to be an “accountant.”

An alternative (and not mutually exclusive) approach is to double down on the skills that are uniquely human. The quote at the top comes from Brandeis Marshall, who writes about the skills that are unAI-able. She highlights three categories:

Contextual awareness—since generative models struggle to understand and integrate various contextual factors, such as cultural, emotional, political, and social awareness.

For example, it is difficult for models to detect fake news and realize that an AI-generated image is not actually real based on its content or setting.

Conflict resolution—since generative models struggle to determine when not to respond (they always respond) or how to provide appropriate responses (since they often take biased stances).

For example, it is difficult for models to handle grey areas in a conflict.

Critical thinking—since generative models struggle to perform problem-solving, inference, and strategy.

For example, even if it is easy to generate code (since we’re all programmers now), it is difficult for models to judge the impact of that code on minoritized groups.

Along lines similar to Brandeis’ ideas, Joe Procopio writes in Inc. about the opportunity to emphasize these human skills. With the explosion of AI-generated output, Joe theorizes that there is “a secondary effect that immediately increases the scarcity, and thus the value, of uniquely human knowledge, experience, and intuition.”

My concern with this perspective for knowledge workers: a few years ago it was unimaginable that AI could generate text and images as well as it does now. As the skill of AI models increases (see “some evidence” below), the list of “uniquely human” skills could continue to dwindle—which I guess could imply perpetual skillset pivots for knowledge workers.

But the opportunity here is to help knowledge workers continue to learn new skills, whether or not those skills involve AI.

Productization challenges

For those who have considered starting up a new generative AI product (guilty ✋), the road is tempting—OpenAI makes it so easy with their API, right?!—but it is far from smooth.

Phillip Carter, a product manager for a datastore query platform, writes an amazingly comprehensive overview of the challenges of building a product with an LLM. A tidbit:

a lot of that [AI] hype is just some demo bullshit that would fall over the instant anyone tried to use it for a real task that their job depends on. The reality is far less glamorous.

What makes it hard? He discusses a few challenges:

Tight context windows

Slow API response times

Prompting is “the wild fuckin’ west”

The input “space” is wide

Legal and compliance concerns are concerning

“Early access programs” are usually demo-ware or vaporware

Even if you get a product stood up (and maybe get some investors or early users), converting to a sustainable business is even more challenging.

Christian Owens, cofounder of a payment software company, writes in VentureBeat about the business challenges and potential solutions. Here’s my summary:

Challenges:

Monetization—profitability is difficult without a specific niche or integration strategy.

Differentiation—the AI market is crowded (like, really crowded; here’s a list of almost 5,000 products) and many startups lack a clear problem to solve.

Ethical and security concerns—the challenges of misinformation and privacy are hard to address.

Solutions:

Focus on customer needs—identify a known and understood customer problem to solve.

Plan for global scale—access a larger addressable market to ensure viability.

Build a monetization thesis—determine your value metric and refine the pricing strategy around this.

Some evidence

The AI shortcomings identified by Brandeis (above) are serious limitations.

As a small example of the effort to move beyond those limitations, a new study by researchers at Johns Hopkins University shows advances in “theory-of-mind (ToM) tasks”—i.e., tasks that require understanding beliefs, goals, or mental states.

They ran experiments with 20 example problems that require complex reasoning. First, they found that GPT-4 had 80% ToM accuracy in zero-shot settings (while GPT-3.5-turbo, the backbone of the public ChatGPT, only had 50% ToM accuracy, eeek). Human benchmarks had 87% ToM accuracy.

Then, they added conditions for in-context learning—step-by-step thinking and two-shot chain-of-thought—which bumped the ToM accuracy for GPT-3.5-turbo to 91% and GPT-4 to 100% (ummm… 🤯).
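To make those conditions concrete, here is a minimal Python sketch of how a zero-shot prompt differs from a two-shot chain-of-thought prompt with a step-by-step cue. Everything in it is an assumption for illustration: the scenarios, the prompt layout, and the names `WorkedExample`, `zero_shot_prompt`, and `few_shot_cot_prompt` are hypothetical and are not taken from the study. It only builds prompt strings and does not call any model.

```python
from dataclasses import dataclass


@dataclass
class WorkedExample:
    """One solved problem shown to the model before the real question."""
    scenario: str
    question: str
    reasoning: str  # the step-by-step explanation we want the model to imitate
    answer: str


# Cue appended to nudge the model to reason before answering (assumed wording).
STEP_BY_STEP_CUE = "Let's think step by step."


def zero_shot_prompt(scenario: str, question: str) -> str:
    """No examples and no reasoning cue: just the problem."""
    return f"Scenario: {scenario}\nQuestion: {question}\nAnswer:"


def few_shot_cot_prompt(examples, scenario, question, step_by_step=True):
    """Prepend worked examples (with reasoning), then ask the real question."""
    parts = [
        f"Scenario: {ex.scenario}\n"
        f"Question: {ex.question}\n"
        f"Answer: {ex.reasoning} So the answer is: {ex.answer}"
        for ex in examples
    ]
    cue = f" {STEP_BY_STEP_CUE}" if step_by_step else ""
    parts.append(f"Scenario: {scenario}\nQuestion: {question}\nAnswer:{cue}")
    return "\n\n".join(parts)


# Two made-up worked examples ("two-shot"); not the study's actual items.
EXAMPLES = [
    WorkedExample(
        scenario="Ana puts her keys in a drawer and leaves. Ben moves them to a shelf.",
        question="Where will Ana look for her keys?",
        reasoning="Ana did not see Ben move the keys, so she still believes they are in the drawer.",
        answer="the drawer",
    ),
    WorkedExample(
        scenario="Raj sees a cereal box on the table. The box actually holds pencils.",
        question="What does Raj think is in the box?",
        reasoning="Raj has only seen the outside of the box, so he assumes it holds what the label says.",
        answer="cereal",
    ),
]

prompt = few_shot_cot_prompt(
    EXAMPLES,
    scenario="Mia leaves her umbrella by the door. While she is out, Leo puts it in the closet.",
    question="Where will Mia look for her umbrella?",
)
print(prompt)
```

The resulting string would be sent to whichever model you are testing. The only difference between conditions is what surrounds the question, which is why this kind of change is cheap to try before reaching for anything heavier.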

The takeaway, yet again, is that in-context learning is important for accuracy. Take note, NEDA.

—Aaron